We welcome suggestions/bug fixes and donations of datasets/results.

This webpage provides supplementary materials to an experimental paper we submitted to VLDB conference 2008. Here you will find all the source code to our implementation, all the data sets we used in the experiments as well as additional experimental evaluation results.

DISCLAIMER: We have made the best effort to faithfully re-implement all the algorithms evaluated in this project, and compare them in the most possibly fair manner. The purpose of this project is to provide a consolidation of existing works on querying and mining time series, and to serve as a starting point for providing references and benchmarks for future research on time series. The algorithms are the intellectual property of the original authors.

Download Sourcecode and data sets:

The source code for the similarity measures and their pruning techniques are implemented in Java, and can be downloaded here. The data sets used for the accuracy comparison are provided by the UCR Time Series Repository, we are only hosting them here for the completeness of our project. While the paper is under review, the files are password protected by the paper ID.Additional materials:

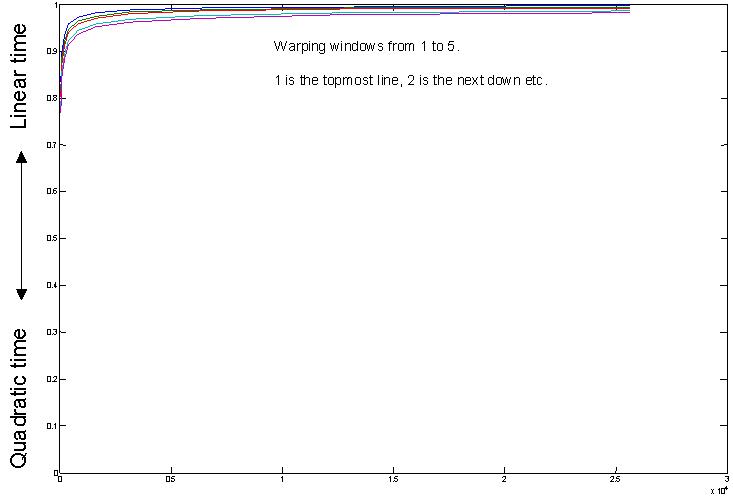

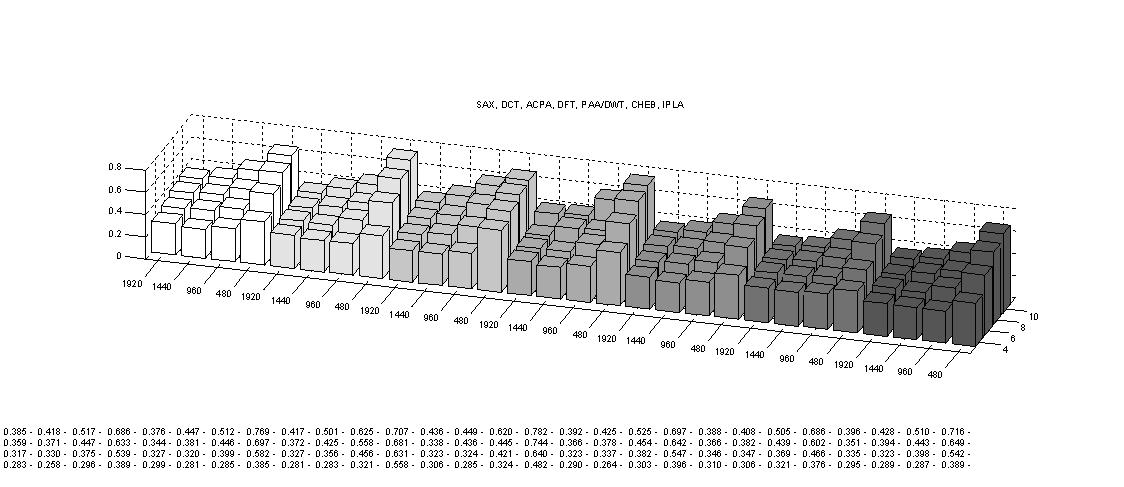

We show expanded and additional experiments that we could not fit into the paper.We claimed that the amortized time for constrained DTW gets better for large datasets. For large enough datasets, constrained DTW is about as fast as Euclidean distance. Lets test this. We searched increasingly large random walk datasets, of size [50, 100, 200, 400, 800, 1600, 3200, 6400, 12800, 25600] for the one nearest neighbor to a random query. Each time series was of length 128. (results are averaged over 100 queries) We tried every warping window size from 1 to 5. Recall that on most of the small datasets at the UCR archive, the best warping window size tends to be less than five, and experiments in this file show that the best warping window gets smaller as the data gets bigger. We measured the fraction of distance calculations that are pruned by a linear time lower bounding test (the LB_Keogh) which takes as long as Euclidean distance O(n). The results below show that, even for relatively small datasets with a few hundred items, at least 90% of the distance calculations are pruned, and we therefore only do the actual DTW less than 10% of the time. For large datasets, even with the most pessimistic warping window width, 98% of the DTW calculations are pruned.

These results do not include other optimizations that you can do, finding a good match early on helps,

because the best-so-far gets smaller more quickly and we can prune more.

So indexing or hashing to an approximate match, then doing the scan helps.

Also, the lower bound itself can use early abandoning [a].

Bottom Line: For sequential scanning, constrained DTW is about as fast as Euclidean distance for large datasets.

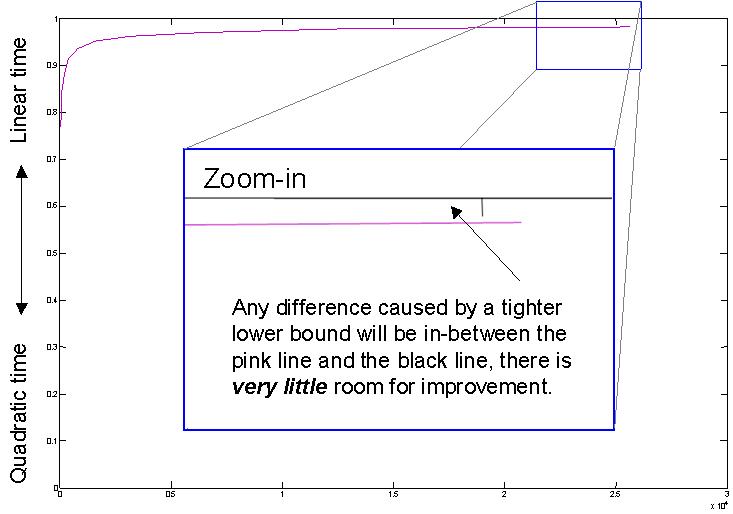

We can use the previous example to consider implications for tighter lower bounds. Occasionally there have been research efforts that attempt to create a tighter lower bound for DTW [b][c][d][e], could this help? Consider the figure below, which shows just the fraction of distance calculations pruned by LB_Keogh with a warping window of 5. Any improvement caused by a tighter lower bound will be in-between the pink line and the black line, there is very little room for improvement. In fact, some of the tighter lower bounds take more CPU time to compute, or they do not support early abandoning for the lower bound (see [a]) so they can be slower overall than the slightly weaker, but faster to compute lower bounds.

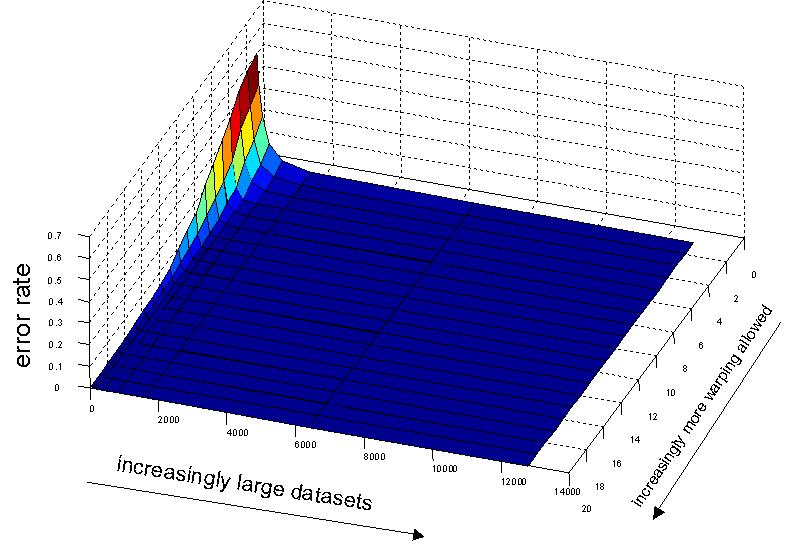

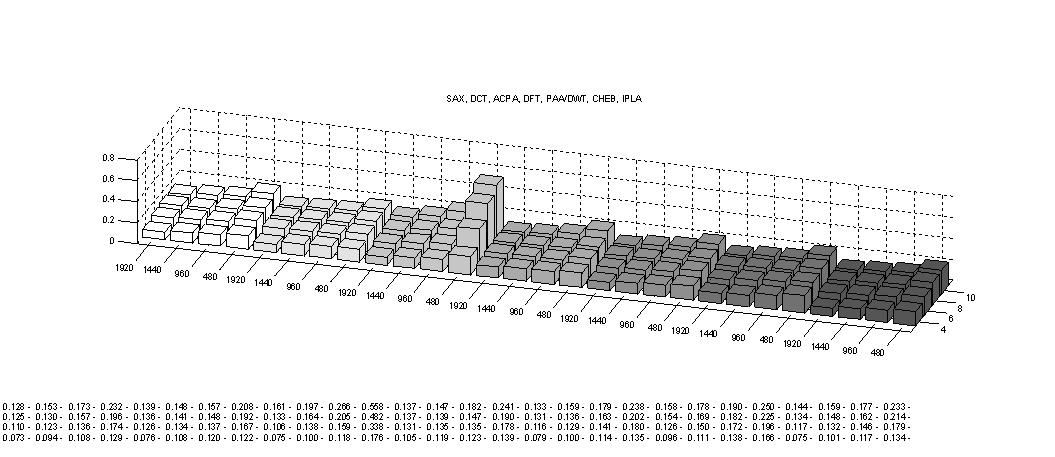

The next figure is an expanded version of a figure in our VLDB paper. We tested the error rate of nearest neighbor classification on the Two-Pattern dataset for the datasets sizes [50, 100, 200, 400, 800, 1600, 3,200, 6,400, 12,800] and over every DTW warping window width from 1 to 20. Recall that the case where DTW is 1 is the means it is the degenerate case of the Euclidean distance. What the figure shows is that if you have a small dataset, warping can help, and more warping is better up to some limit. However for larger datasets the effect diminishes. If you have a mere 2,000 objects, then Euclidean distance is as accurate at DTW. The take-home lessons are:

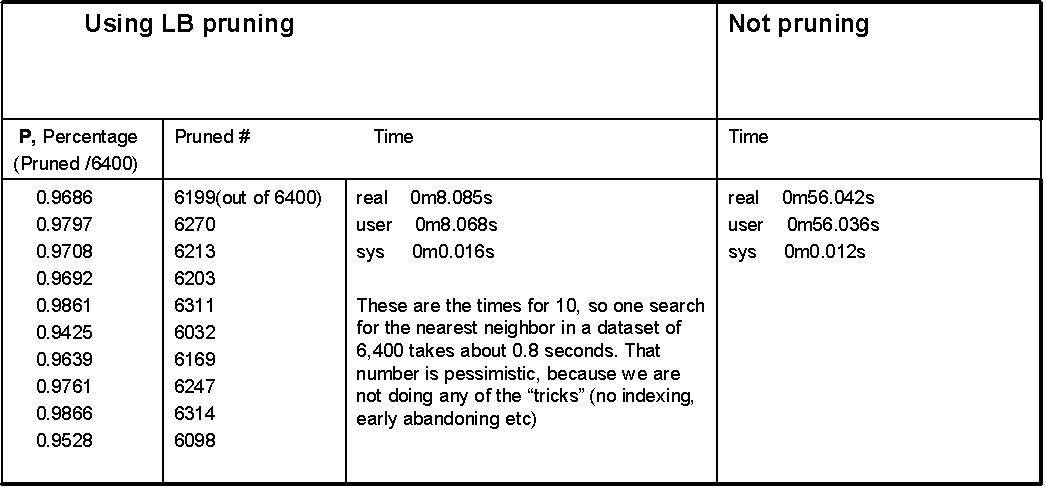

In the paper we note that the amortized cost of DTW on a large dataset is O((P * n) + (1-P nw)), where P is the fraction of the database pruned by a linear lower bound test. Below are the actual numbers for P on ten random queries. The queries where Two-Pat objects, but disjoint from the dataset searched (otherwise the results would be optimistic).

The next figure shows how we obtain the results for the Arbitrarily Naive Algorithm introduced in the appendix.

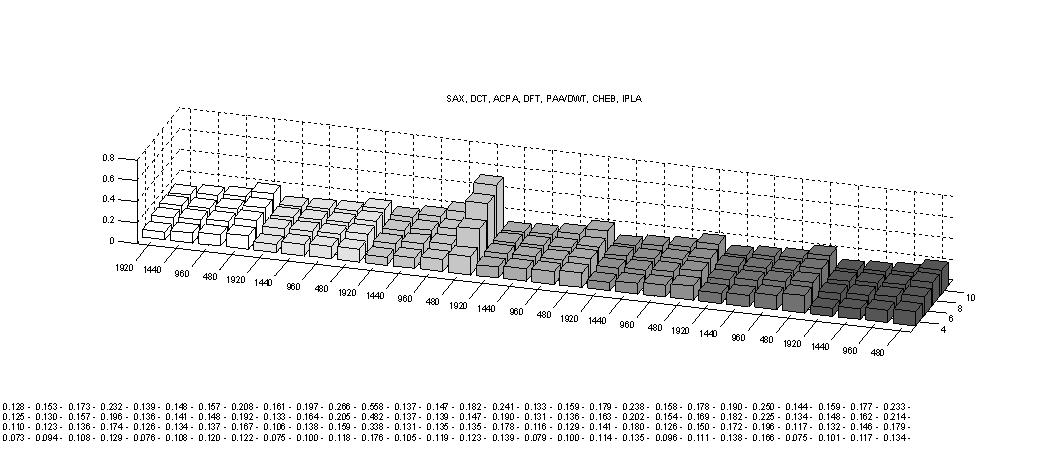

Finally, we show the full version of the three figures comparing the dimensionality reduction techniques in the paper.

First of all, the tightness of lower bounds for various time series representations on an ECG data set.

Next, the tightness of lower bounds for various time series representations on a relatively bursty data set

Third, The tightness of lower bounds for various time series representations on a periodic data set of tide levels

Additional information on comparing similarity measures:

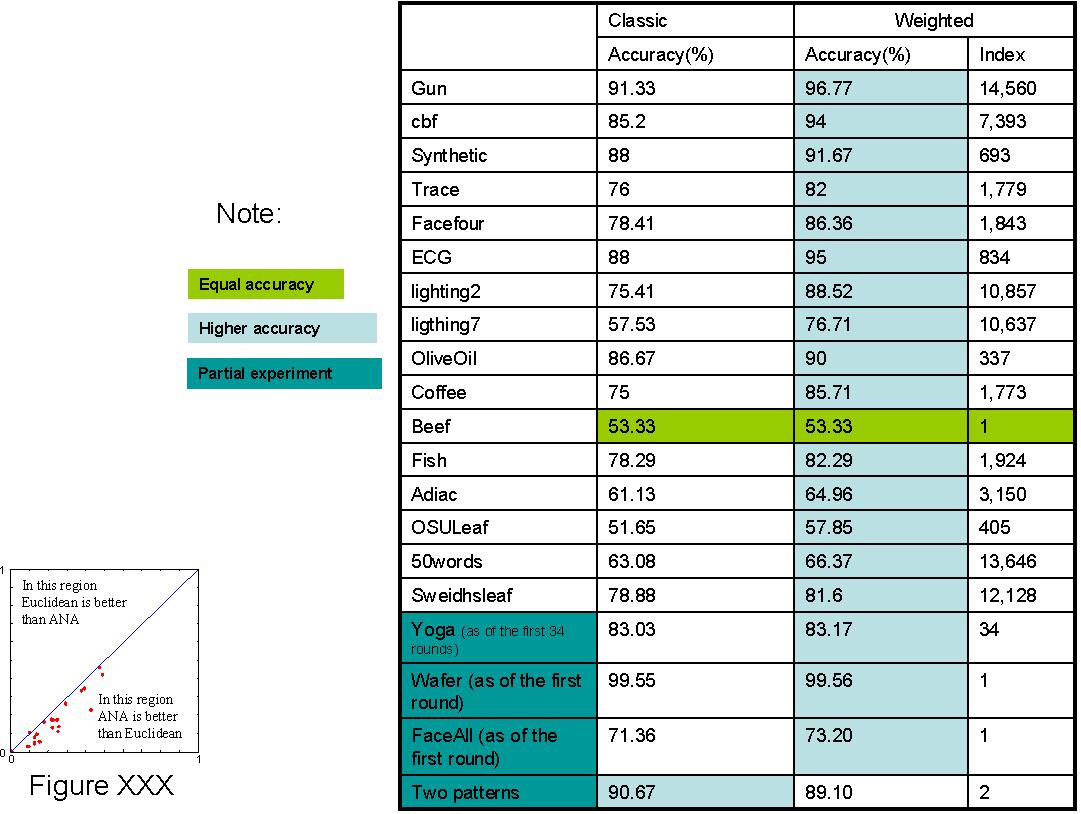

The experiments for similarity measures evaluates the accuracy of different distance measures proposed in the literature. We have re-implemented all the algorithms under a unified framework in Java, and compared them using a 1NN classifier as suggested here. Note that we use a randomized stratified cross validation rather than the random holdout method used there. This helps to reduce the bias and variance in the results. For more details on the classification algorithm, please refer to our paper. Table 1 shows the average classificaiton error ratios of the distance measures evaluated on all the data sets. Table 2 shows the variance of the cross validations on each data set for the individual distance measures.

| Data Set | total data size | data length | Training size | Testing Size | # of crosses | ED | L1-norm | L_inf-norm | DISSIM | TQuEST | DTW | DTW (w) | EDR | ERP | LCSS | LCSS(w) | Swale | Spade |

| 50words | 1355 | 270 | 271 | 1084 | 5 | 0.407226 | 0.378619 | 0.554677 | 0.3775458 | 0.525911 | 0.3749554 | 0.2907203 | 0.2713154 | 0.3405476 | 0.2979616 | 0.2791264 | 0.50048 | 0.340845 |

| Adiac | 781 | 176 | 156 | 625 | 5 | 0.464458 | 0.495048 | 0.42844 | 0.4974606 | 0.7183453 | 0.4651784 | 0.4455685 | 0.4570293 | 0.4356043 | 0.4336459 | 0.417847 | 0.9612152 | 0.437908 |

| Beef | 60 | 470 | 30 | 30 | 2 | 0.4 | 0.55 | 0.583333 | 0.55 | 0.6833333 | 0.433333 | 0.5833333 | 0.399651 | 0.5666667 | 0.4020491 | 0.5166667 | 0.6166667 | 0.5 |

| Car | 120 | 577 | 60 | 60 | 2 | 0.275 | 0.3 | 0.3 | 0.2166667 | 0.2666667 | 0.333333 | 0.2583333 | 0.3706035 | 0.1666667 | 0.2083333 | 0.35 | 0.75 | 0.25 |

| CBF | 930 | 128 | 60 | 870 | 16 | 0.0869219 | 0.040999 | 0.533993 | 0.0489427 | 0.1709197 | 0.0025253 | 0.005805 | 0.0126263 | 0 | 0.0166162 | 0.0147625 | 0.040404 | 0.04386 |

| chlorineconcentration | 4327 | 166 | 487 | 3840 | 9 | 0.349488 | 0.373781 | 0.3254 | 0.368033 | 0.4402746 | 0.379527 | 0.348222 | 0.3878872 | 0.3755934 | 0.373988 | 0.367643 | 0.7678035 | 0.439331 |

| cinc\_ECG\_torso | 1420 | 1639 | 48 | 1372 | 30 | 0.0511364 | 0.0438 | 0.180108 | 0.046007 | 0.0835438 | 0.165165 | hangzhou | 0.0113636 | 0.1445707 | 0.0568182 | 0.022727 | 0.1022727 | t60p |

| Coffee | 56 | 286 | 28 | 28 | 2 | 0.193487 | 0.246488 | 0.087484 | 0.1960409 | 0.4265645 | 0.190932 | 0.2515964 | 0.160281 | 0.2132822 | 0.213282 | 0.236909 | 0.4463602 | 0.185185 |

| diatomsizereduction | 322 | 345 | 32 | 290 | 10 | 0.022402 | 0.033312 | 0.019373 | 0.0264843 | 0.1609052 | 0.01515 | 0.0261406 | 0.0190831 | 0.0259402 | 0.0449727 | 0.084375 | 0.6889524 | 0.016393 |

| ECG200 | 200 | 96 | 40 | 160 | 5 | 0.162327 | 0.182441 | 0.174908 | 0.1601609 | 0.2659355 | 0.220672 | 0.152588 | 0.2107404 | 0.2126969 | 0.1706303 | 0.126402 | 0.5393065 | 0.25641 |

| ECGFiveDays | 884 | 136 | 34 | 850 | 26 | 0.1176471 | 0.106787 | 0.235023 | 0.1031222 | 0.1813725 | 0.153665 | 0.1223077 | 0.1105697 | 0.1274734 | 0.2318451 | 0.187414 | 0.4290183 | 0.264706 |

| FaceFour | 112 | 350 | 24 | 88 | 5 | 0.1487179 | 0.143587 | 0.420652 | 0.1716304 | 0.1435897 | 0.064348 | 0.1641304 | 0.0451087 | 0.0423077 | 0.1439424 | 0.0459783 | 0.3679487 | 0.25 |

| FacesUCR | 2250 | 131 | 200 | 2050 | 11 | 0.225088 | 0.192365 | 0.401285 | 0.2054775 | 0.288879 | 0.060398 | 0.0785324 | 0.0503316 | 0.0275378 | 0.0462489 | 0.0457213 | 0.1269173 | 0.315075 |

| fish | 350 | 463 | 70 | 280 | 5 | 0.3190476 | 0.292857 | 0.313571 | 0.3114286 | 0.4959697 | 0.3292857 | 0.2607143 | 0.1071429 | 0.2164286 | 0.0672871 | 0.16 | 0.8571429 | 0.15 |

| Gun\_Point | 200 | 150 | 40 | 160 | 5 | 0.14625 | 0.0925 | 0.18625 | 0.08375 | 0.175 | 0.14 | 0.055 | 0.0791667 | 0.1613636 | 0.0975 | 0.065 | 0.3458333 | 0.007 |

| Haptics | 463 | 1092 | 92 | 371 | 5 | 0.6190862 | 0.634078 | 0.631715 | 0.6397909 | 0.6694428 | 0.622433 | 0.59278 | 0.4660219 | 0.6008803 | 0.631141 | moonsis | 0.7675658 | t60p |

| InlineSkate | 650 | 1882 | 100 | 550 | 6 | 0.6647743 | 0.646037 | 0.714698 | 0.6496647 | 0.7572772 | 0.556604 | 0.6032117 | 0.5305818 | 0.4833333 | 0.5166667 | hangzhou | 0.7601415 | 0.643396 |

| ItalyPowerDemand | 1096 | 24 | 138 | 958 | 8 | 0.0397409 | 0.047312 | 0.043537 | 0.0427587 | 0.0892857 | 0.0668318 | 0.0553889 | 0.0751492 | 0.0497656 | 0.1002112 | 0.076267 | 0.1720238 | 0.233193 |

| Lighting2 | 121 | 637 | 24 | 97 | 5 | 0.340594 | 0.250532 | 0.388731 | 0.2613132 | 0.4437012 | 0.20417 | 0.3200532 | 0.087527 | 0.1903567 | 0.1985889 | 0.108018 | 0.2881828 | 0.271739 |

| Lighting7 | 143 | 319 | 70 | 73 | 2 | 0.377495 | 0.286106 | 0.566243 | 0.3003914 | 0.5025641 | 0.252153 | 0.2024462 | 0.0926392 | 0.2869863 | 0.282364 | 0.116134 | 0.5451076 | 0.557143 |

| MALLAT | 2400 | 1024 | 120 | 2280 | 20 | 0.0319444 | 0.041162 | 0.079364 | 0.0416886 | 0.0940351 | 0.0375 | 0.040351 | 0.0795833 | 0.0333333 | 0.0875 | miner-04! | 0.5333333 | 0.166667 |

| MedicalImages | 1141 | 99 | 228 | 913 | 5 | 0.3191853 | 0.322432 | 0.359997 | 0.3294933 | 0.4508373 | 0.286038 | 0.2806724 | 0.3598964 | 0.3090223 | 0.3493883 | 0.357306 | 0.496047 | 0.434152 |

| Motes | 1272 | 84 | 53 | 1219 | 24 | 0.110224 | 0.082373 | 0.239882 | 0.0798868 | 0.2111378 | 0.089614 | 0.1176868 | 0.0954576 | 0.1060345 | 0.0639257 | 0.076522 | 0.1119792 | 0.102843 |

| OliveOil | 60 | 570 | 30 | 30 | 2 | 0.1496107 | 0.235818 | 0.166852 | 0.2163515 | 0.298109 | 0.100111 | 0.118465 | 0.0623113 | 0.1323693 | 0.134594 | 0.054802 | 0.7169077 | 0.206897 |

| OSULeaf | 442 | 427 | 90 | 352 | 5 | 0.4477587 | 0.488411 | 0.520146 | 0.4744251 | 0.57061 | 0.400921 | 0.4242488 | 0.1147048 | 0.3653516 | 0.3594464 | 0.280925 | 0.4048281 | 0.212209 |

| plane | 210 | 144 | 35 | 175 | 6 | 0.051429 | 0.0371429 | 0.0328571 | 0.0419048 | 0.0380952 | 9.52E-04 | 0.032381 | 0.0014286 | 0.0095238 | 0.0161905 | 0.0619048 | 0.1809524 | 0.005714 |

| SonyAIBORobotSurfac | 621 | 70 | 40 | 581 | 16 | 0.080567 | 0.07565 | 0.10613 | 0.0879636 | 0.1350286 | 0.076924 | 0.0744755 | 0.0836683 | 0.0696093 | 0.228227 | 0.1545614 | 0.4220575 | 0.194737 |

| SonyAIBORobotSurfac | 980 | 65 | 80 | 900 | 12 | 0.094255 | 0.084119 | 0.134779 | 0.0709454 | 0.1864198 | 0.0795933 | 0.0828429 | 0.0921816 | 0.0618705 | 0.2381363 | 0.089041 | 0.2288247 | 0.32211 |

| StarLightCurves | 9236 | 1024 | 1000 | 8236 | 9 | 0.1424475 | 0.142551 | 0.151026 | 0.1424588 | 0.129765 | gehry | vanddrroh | 358-1 | 358-2 | 358-3 | 358-4 | jupiter | little-boy |

| SwedishLeaf | 1125 | 128 | 225 | 900 | 5 | 0.295333 | 0.286222 | 0.356889 | 0.2993333 | 0.3473333 | 0.256222 | 0.2213333 | 0.145111 | 0.1635556 | 0.1468889 | 0.148 | 0.6666667 | 0.2544 |

| Symbols | 1020 | 398 | 34 | 986 | 30 | 0.0876306 | 0.098154 | 0.151919 | 0.0928257 | 0.0778873 | 0.048668 | 0.0959469 | 0.0201903 | 0.0590449 | 0.053238 | 0.055293 | 0.1145013 | 0.017598 |

| synthetic\_control | 600 | 60 | 120 | 480 | 5 | 0.1425 | 0.14625 | 0.227083 | 0.1583333 | 0.6402778 | 0.01875 | 0.0141667 | 0.1180556 | 0.0345833 | 0.0595833 | 0.074583 | 0.1458333 | 0.15 |

| Trace | 200 | 275 | 40 | 160 | 5 | 0.3675 | 0.27875 | 0.445 | 0.28625 | 0.1583333 | 0.01625 | 0.075 | 0.15 | 0.0840625 | 0.118125 | 0.141562 | 0.2729167 | 0 |

| TwoLeadECG | 1162 | 82 | 46 | 1116 | 25 | 0.1291806 | 0.154474 | 0.150515 | 0.1631198 | 0.2660256 | 0.033134 | 0.0695266 | 0.0646059 | 0.071415 | 0.1461236 | 0.154046 | 0.282967 | 0.01721 |

| TwoPatterns | 5000 | 128 | 1000 | 4000 | 5 | 0.09515 | 0.039 | 0.797099 | 0.0364523 | 0.7473317 | 0 | 5E-05 | 0.00085 | 0.010413 | 0.00045 | 0.00015 | 0.0033308 | 0.051552 |

| wafer | 7174 | 152 | 1025 | 6149 | 7 | 0.005048 | 0.003699 | 0.020916 | 0.0045599 | 0.0135399 | 0.01499 | 0.0047691 | 0.0019514 | 0.005517 | 0.0040531 | 0.004492 | 0.1060776 | 0.018265 |

| WordsSynonyms | 905 | 270 | 181 | 724 | 5 | 0.3930436 | 0.374143 | 0.530082 | 0.3753381 | 0.5288119 | 0.370728 | 0.3150634 | 0.295271 | 0.3462732 | 0.2944248 | 0.280141 | 0.4994552 | 0.321789 |

| yoga | 3300 | 426 | 300 | 3000 | 11 | 0.16036 | 0.160818 | 0.180757 | 0.1668507 | 0.2158179 | 0.1510385 | 0.1513972 | 0.1121763 | 0.1326428 | 0.1085568 | 0.1344536 | 0.4301165 | 0.130435 |

| Data Set | # of series | data length | Training size | Testing Size | # of crosses | ED | L1-norm | L_inf-norm | DISSIM | TQuEST | DTW | DTW (w) | EDR | ERP | LCSS | LCSS(w) | Swale | Spade |

Notes:

How to get the source code and the data sets:

For Proprietary reasons, we ask you to contact us for the source code implementation. For the data sets, we ask you to contact Professor Eamonn Keogh.